Tachyon supercharges Spark

Tachyon, a new caching file system that is part of the Berkeley Data Analytics Stack, created some buzz last week at Strata Santa Clara 2014. Tachyon was introduced at the May 2013 Spark Meetup, and the August 2013 AMP Camp 3 session has the best overview currently available online, in both video and slides:

(Slides from Strata are also available, but video seems not to be, since it was a "tutorial" and not a "presentation".)

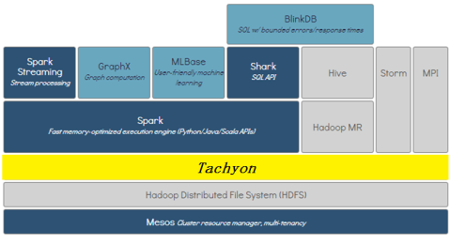

Tachyon speeds up Spark by caching data in RAM. But wait, you ask, doesn't Spark get its speed by storing everything in RAM? How can caching make that any faster, and wouldn't the extra layer just slow it down? The diagram below, from Haoyuan Li's August 2013 presentation, shows that extra layer:

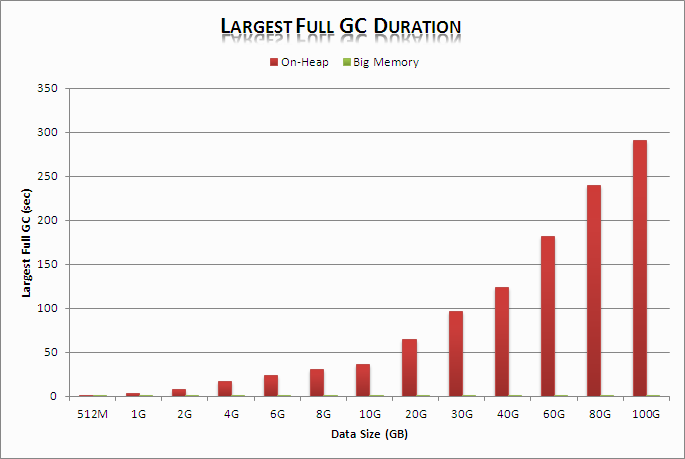

The reason is the JVM garbage collection performance penalty. As shown in the chart below from performanceterracotta.blogspot.com, once the heap reaches 100GB, the JVM can pause for 5 minutes at a time doing garbage collection. Extrapolating to a system with 1TB of RAM, a node could pause for up to an hour at a time for garbage collection!
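The extrapolation above is plain proportional scaling, and can be sketched in a few lines. This assumes pause time grows linearly with heap size, which is a simplification made here for illustration and not something the chart establishes. The function name is hypothetical, and 1TB is taken as 1000GB.

```python
def extrapolate_pause_minutes(heap_gb, ref_heap_gb=100.0, ref_pause_min=5.0):
    """Scale a reference GC pause linearly with heap size.

    Linear scaling is an assumption for illustration; real GC pause
    behavior depends on the collector, live-set size, and tuning.
    """
    return ref_pause_min * (heap_gb / ref_heap_gb)


# Reference point from the post: a 100GB heap pausing for 5 minutes.
pause_at_1tb = extrapolate_pause_minutes(1000)
print(f"Estimated pause at 1TB: {pause_at_1tb:.0f} minutes")
```

Under that linear assumption the estimate comes out to about 50 minutes, which is where the "up to an hour" figure comes from.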

Tachyon, even though it is written in Java, avoids GC performance problems because it uses hardly any heap at all. Instead, it caches data in the C-language-based RAM disk filesystems that already exist on Linux systems. So with Tachyon in place, when Spark persists an RDD out to disk, it is really persisting it to Tachyon. Tachyon handles redundancy and resiliency much as Spark itself does: it keeps track of the "lineage" of each data computation and recomputes results in case of failure (the assumptions are that data sets are immutable and processes are deterministic).
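To make the lineage idea concrete, here is a toy sketch in plain Python. It is not Tachyon's or Spark's API: the `LineageDataset` class and its methods are hypothetical names invented for illustration. Each dataset remembers its parent and the deterministic function that derives it, so a lost in-memory copy can be rebuilt by recomputing instead of being restored from a replica.

```python
class LineageDataset:
    """Toy immutable dataset that remembers how it was derived (hypothetical)."""

    def __init__(self, compute, parent=None):
        self._compute = compute   # deterministic function of the parent's rows
        self._parent = parent     # upstream dataset, or None for a source
        self._cache = None        # stands in for the in-memory copy
        self.computations = 0     # how many times this dataset was (re)built

    def map(self, fn):
        # Derive a new dataset; only the recipe is recorded, nothing runs yet.
        return LineageDataset(lambda rows: [fn(r) for r in rows], parent=self)

    def collect(self):
        # Rebuild from lineage whenever the cached copy is missing.
        if self._cache is None:
            upstream = self._parent.collect() if self._parent else None
            self._cache = self._compute(upstream)
            self.computations += 1
        return list(self._cache)

    def lose_cache(self):
        # Simulate a failure that wipes the in-memory copy.
        self._cache = None


source = LineageDataset(lambda _: [1, 2, 3])
doubled = source.map(lambda x: x * 2)

first = doubled.collect()
doubled.lose_cache()
second = doubled.collect()  # recomputed from lineage, not restored from a replica
assert first == second
```

The recomputed result matches the original only because the source data never changes and the transformation is deterministic, which is exactly the pair of assumptions noted above.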

The result is that, yes, for tiny datasets, Tachyon is slower than pure Spark because it's adding another layer of indirection. But for any reasonably large data set and any reasonably long-running job, Tachyon provides a 2-8x speedup.

As all the Tachyon slide decks say, "RAM is King". Thus, I propose a new metric: TB RAM/rack. The highest density I've been able to find is the SuperMicro FatTwin F617R2-RT+. It squeezes in 8TB RAM and 128 cores (8 sets of Dual 8-Core Xeons) in 4U. In a 42U rack, that's 80TB and 1,280 cores.
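The per-rack figures follow from simple arithmetic, sketched below. The constant names are mine, the inputs are the numbers quoted above, and the sketch assumes every whole 4U slot in the rack holds a chassis (no space reserved for switches or power).

```python
RACK_UNITS = 42          # height of the rack
CHASSIS_UNITS = 4        # height of one FatTwin chassis
NODES_PER_CHASSIS = 8    # 8 sets of dual-socket nodes per chassis
TB_RAM_PER_NODE = 1      # 8TB per chassis spread over 8 nodes
CORES_PER_NODE = 2 * 8   # dual 8-core Xeons

# Only whole chassis fit, so use integer division (2U are left over).
chassis_per_rack = RACK_UNITS // CHASSIS_UNITS
tb_ram_per_rack = chassis_per_rack * NODES_PER_CHASSIS * TB_RAM_PER_NODE
cores_per_rack = chassis_per_rack * NODES_PER_CHASSIS * CORES_PER_NODE

print(f"{chassis_per_rack} chassis, {tb_ram_per_rack}TB RAM, {cores_per_rack} cores per rack")
```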

(Of course that's not cheap: achieving 1TB per node requires high-capacity 32GB DIMMs, which cost $30/GB, compared to $10/GB for the more common 8GB DIMMs.)
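For a rough sense of scale, the sketch below turns those per-GB prices into memory-only costs. It assumes 1TB = 1024GB and uses the 80TB-per-rack figure from above. The helper name is hypothetical, and the $10/GB line is only a price comparison, not a claim that 8GB DIMMs could actually reach 1TB per node.

```python
GB_PER_TB = 1024  # assumption: binary terabytes


def ram_cost_usd(tb, usd_per_gb):
    """Memory-only cost for a given capacity and per-GB price."""
    return tb * GB_PER_TB * usd_per_gb


node_cost_32gb_dimms = ram_cost_usd(1, 30)    # 1TB node at $30/GB
node_cost_8gb_pricing = ram_cost_usd(1, 10)   # same capacity at $10/GB, for comparison
rack_cost_32gb_dimms = ram_cost_usd(80, 30)   # 80TB rack at $30/GB

print(f"Per node: ${node_cost_32gb_dimms:,} vs ${node_cost_8gb_pricing:,}")
print(f"Per rack: ${rack_cost_32gb_dimms:,}")
```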

Previously, Big Data had been focused on hard drive density. E.g. the slowest, archival tier in a storage system might use maximal-density hardware from 45drives.com. Using 6TB drives, that's 2.7PB/rack.

But with the power of Tachyon-charged Spark, the Big Compute I blogged about a couple of months ago has arrived, and it's time to start talking about RAM density, not just hard drive density. Sadly, at the moment, any kind of RAM density takes us out of the realm of commodity hardware, which typically tops out at 64GB per node.