Four Approaches to Artificial General Intelligence

Artificial General Intelligence (AGI), also known as Strong AI, distinguishes itself from the broader term AI by having as its specific goal human-level or greater intelligence. There have been many attempts to achieve it. Pei Wang's page Artificial General Intelligence -- A Gentle Introduction has the best curated set of links. People have categorized the numerous approaches in various ways; here is my categorization:

Neural

The neural approaches, sometimes called "connectionist", try to imitate the human brain in some way.

1. Neuroscience

The neural approaches inspired by neuroscience try to faithfully reproduce the human brain. My post Neuromorphic vs. Neural Net explains the differences between neuromorphic approaches and artificial neural networks.

2. Artificial Neural Network

Artificial Neural Networks were originally inspired by the human brain in the 1950s. The term still refers to that basic original architecture, but the field no longer has an explicit goal of accurately modeling the human brain.

Most promising in this category are Neural Turing Machines (NTMs), such as those being commercialized by DeepMind, which Google acquired. NTMs add state to conventional neural networks, in the form of a memory that the network learns to read from and write to.
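To make the idea of added state concrete, here is a minimal sketch of one piece of an NTM: reading from a memory matrix by content, where each row is weighted by its similarity to a query key. The function names, the tiny memory, and the fixed `key` and `beta` values are assumptions made for illustration; in a real NTM a trained controller network emits the key and sharpness, and location-based addressing and writing are also involved.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def content_read(memory, key, beta=5.0):
    """Return (weights, read_vector) for a content-based read.

    memory: list of rows (the stored state)
    key:    query vector (hypothetical; a controller would produce it)
    beta:   sharpness of the focus (hypothetical fixed value)
    """
    scores = [beta * cosine(row, key) for row in memory]
    peak = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]      # softmax over memory rows
    width = len(memory[0])
    # The read vector is the weighted sum of all memory rows.
    read = [sum(w * row[j] for w, row in zip(weights, memory)) for j in range(width)]
    return weights, read


memory = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
weights, read = content_read(memory, key=[0.9, 0.1, 0.0])
```

Because every step is a smooth function of the key and the memory, a read like this can be trained with gradient descent along with the rest of the network.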

Machine learning, especially neural networks, has been dominating headlines to the point of eclipsing the rest of AI, such as the historic symbolic AI described below. The downside of neural networks is their opacity -- they are "black boxes", and there is no way to infuse human-curated knowledge directly.

3. Symbolic

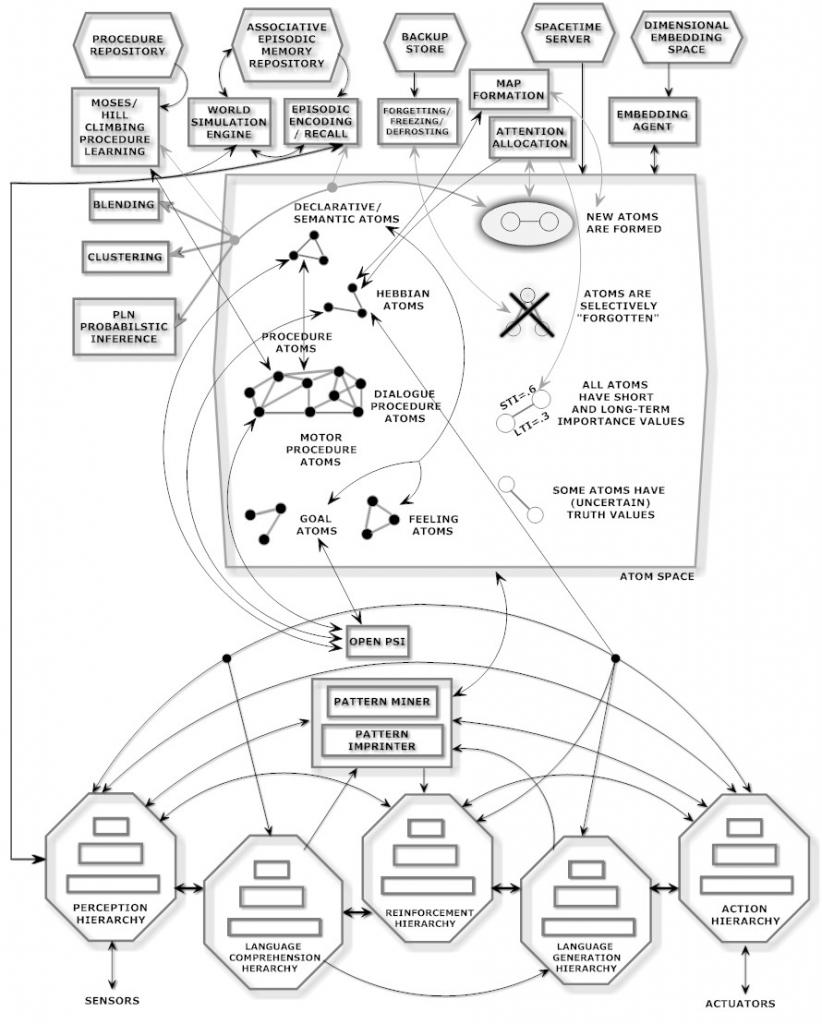

The Cyc project has been under way since 1984, working to encode all of human common sense into a massive graph. A more recent, more comprehensive, and more ambitious project is OpenCog, the architecture of which is illustrated above. OpenCog uses hypergraphs (graphs in which an edge can connect more than two vertices), probabilistic reasoning, and a variety of specialized knowledge stores and algorithms.
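For readers who have not met hypergraphs before, here is a minimal sketch of the data structure. It is not OpenCog's actual API or storage format; the `Hypergraph` class, its method names, and the example facts are all hypothetical, chosen only to show one edge joining three vertices.

```python
from collections import defaultdict


class Hypergraph:
    """Toy hypergraph: each edge joins any number of vertices."""

    def __init__(self):
        self.edges = {}                     # edge id -> (label, tuple of vertices)
        self.incidence = defaultdict(set)   # vertex -> ids of edges that touch it

    def add_edge(self, label, *vertices):
        """Store one labeled edge over the given vertices and return its id."""
        edge_id = len(self.edges)
        self.edges[edge_id] = (label, vertices)
        for v in vertices:
            self.incidence[v].add(edge_id)
        return edge_id

    def edges_of(self, vertex):
        """Return all edges touching a vertex, in insertion order."""
        return [self.edges[e] for e in sorted(self.incidence.get(vertex, ()))]


g = Hypergraph()
g.add_edge("likes", "cat", "fish")            # ordinary edge: two vertices
g.add_edge("gives", "alice", "bob", "book")   # hyperedge: three vertices at once
```

In an ordinary graph, "alice gives bob a book" would have to be broken into several pairwise edges plus a helper node; here it is a single edge, which is the property that makes hypergraphs attractive for storing knowledge.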

4. Neural/Symbolic Integration

Why settle for either the black-box but adaptive approach of neural networks or the white-box but less flexible symbolic approaches, when you can have both? It's quite a challenge, and a few different projects have met varying levels of success in various domains. LIDA is one example of this genre.

UPDATE: An important development in the fourth category is that OpenCog is in the process of incorporating DeSTIN, which does deep learning using Bayesian inference. The integration was first proposed in the 2012 paper Perception Processing for General Intelligence: Bridging the Symbolic/Subsymbolic Gap, and work is still proceeding according to the OpenCog website.